My Research

My research focuses on large language models (LLMs) and their application as agents. My current interests are improving how LLMs use long contexts and evaluating and improving communication between agents. I am also keenly interested in applying LLMs for broader societal benefit.

LLM & NLP

Large language models (LLMs) such as OpenAI's GPT series have revolutionized natural language processing (NLP) by demonstrating an impressive ability to understand and generate human language. A key aspect of these models, and a subject of growing investigation, is their reasoning ability, which is the focus of my work in this area. This includes understanding the mechanisms that enable reasoning within these models, assessing the extent to which LLMs can carry out reasoning processes, and distinguishing genuine reasoning from mere mimicry of patterns seen in pre-training data.

LLM-based Agent and Multi-agent System

Artificial Intelligence (AI) aims to emulate human intelligence, which combines basic skills to address complex tasks. AI agents are an especially important step in the development of AI: they should integrate expert models and external tools to solve intricate problems, a step towards achieving Artificial General Intelligence (AGI). LLMs demonstrate notable capabilities in learning and reasoning and can employ external models, tools, plugins, or APIs for complex problem-solving. LLM-based agents are essentially LLMs augmented with access to these additional resources.

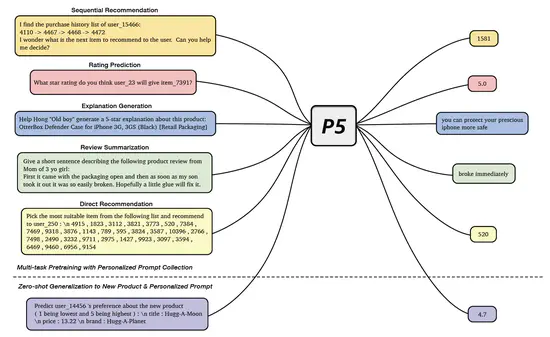
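To make "an LLM augmented with access to tools" concrete, the loop below is a minimal sketch under explicit assumptions: `fake_llm` is a hypothetical stand-in for a real model call, the `TOOL <name>: <arg>` / `ANSWER: <text>` reply format is invented for this illustration, and both tools are toys. The pattern is the one described above: the model proposes an action, the program runs the chosen tool, and the result is fed back as an observation until the model produces an answer.

```python
import operator
from typing import Callable, Dict


# Hypothetical tools; names and behaviour are illustrative only.
def calculator(expr: str) -> str:
    """Evaluate a single binary expression such as '12 * 7' without eval()."""
    ops = {"+": operator.add, "-": operator.sub,
           "*": operator.mul, "/": operator.truediv}
    left, op, right = expr.split()
    return str(ops[op](float(left), float(right)))


def lookup(term: str) -> str:
    """Toy knowledge base standing in for a search API."""
    kb = {"AGI": "artificial general intelligence"}
    return kb.get(term, "unknown")


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: replies 'TOOL <name>: <arg>' or 'ANSWER: <text>'."""
    if "Observation:" in prompt:
        # Once a tool result is available, answer with it.
        return "ANSWER: " + prompt.rsplit("Observation:", 1)[1].strip()
    question = prompt.split("Question:", 1)[1].strip()
    if any(ch.isdigit() for ch in question):
        return "TOOL calculator: " + question
    return "TOOL lookup: " + question


def run_agent(question: str, llm: Callable[[str], str] = fake_llm,
              max_steps: int = 5) -> str:
    """Alternate between model calls and tool calls until an answer appears."""
    prompt = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(prompt)
        if reply.startswith("ANSWER:"):
            return reply[len("ANSWER:"):].strip()
        # Parse 'TOOL <name>: <arg>' and dispatch to the registered tool.
        name, arg = reply[len("TOOL "):].split(":", 1)
        result = TOOLS[name.strip()](arg.strip())
        prompt += f"\nObservation: {result}"
    return "no answer within step budget"


print(run_agent("12 * 7"))  # 84.0
print(run_agent("AGI"))     # artificial general intelligence
```

In a real agent, `fake_llm` would be replaced by a model that is prompted with the tool descriptions; the `max_steps` budget guards against a model that never commits to an answer.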

LLM for Recommender System

Integrating foundation models such as LLMs into recommender systems (RS) marks a significant advancement in the field. Adapting LLMs to recommender systems that serve billions of users and items is a complex yet crucial challenge. My work in this area examines the benefits and potential pitfalls of using LLMs within recommender systems.

Formal Linguistics and Computational Linguistics

Formal linguistics is a branch of linguistics that studies language using formal methods derived from mathematics and logic. It aims to understand the underlying structure of language by constructing precise, well-defined models of its syntax, semantics, and phonology. Its key areas are:

(1) Syntax: the study of the rules and principles that govern sentence structure. Formal syntactic theories explore how words combine to form grammatical sentences and the rules that govern these combinations.

(2) Semantics: the study of the meaning of words, phrases, and sentences. Formal semantics seeks to represent and analyze how linguistic expressions convey different meanings in different contexts.

(3) Phonology: the study of the sound systems of languages, including the rules for combining sounds into meaningful units such as words. Formal phonology models the abstract sound structures of language and their functional roles.
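As a small illustration of what a "precise, well-defined model" of syntax can look like, the sketch below assumes a tiny hypothetical context-free grammar in Chomsky normal form (the rules and lexicon are invented for this example and are not taken from any particular syntactic theory). It uses the CYK algorithm to decide whether the grammar derives a given word sequence, that is, whether the sentence counts as grammatical under these rules.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

# Hypothetical toy grammar in Chomsky normal form (illustrative only).
BINARY_RULES: Dict[Tuple[str, str], Set[str]] = {
    ("NP", "VP"): {"S"},   # S  -> NP VP
    ("Det", "N"): {"NP"},  # NP -> Det N
    ("V", "NP"): {"VP"},   # VP -> V NP
}
LEXICON: Dict[str, Set[str]] = {
    "the": {"Det"}, "a": {"Det"},
    "model": {"N"}, "agent": {"N"}, "sentence": {"N"},
    "parses": {"V"}, "calls": {"V"},
}


def is_grammatical(words: List[str], start: str = "S") -> bool:
    """CYK recognition: True iff the grammar derives `words` from `start`."""
    n = len(words)
    if n == 0:
        return False
    # table[i][j] holds the nonterminals that derive words[i : i + j + 1].
    table: List[List[Set[str]]] = [[set() for _ in range(n)] for _ in range(n)]
    for i, word in enumerate(words):
        table[i][0] = set(LEXICON.get(word, set()))
    for span in range(2, n + 1):          # length of the substring
        for i in range(n - span + 1):      # start position
            for split in range(1, span):   # length of the left part
                left = table[i][split - 1]
                right = table[i + split][span - split - 1]
                for pair in product(left, right):
                    table[i][span - 1] |= BINARY_RULES.get(pair, set())
    return start in table[0][n - 1]


print(is_grammatical("the model parses a sentence".split()))  # True
print(is_grammatical("model the parses a sentence".split()))  # False
```

The same word order constraints that a syntactician states as rules become a mechanical membership test here; richer formalisms (feature grammars, mildly context-sensitive grammars) extend this idea.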